I recently found the need to stand up a local instance of ArgoCD. By local I mean running on my personal machine. The primary goal was to learn ArgoCD while testing the deployment of an application. The most challenging part of this effort was presenting a git repo on the host machine to the local ArgoCD instance, which it then monitors for any app config changes.

Here are the overall steps:

- Establish a local git repo

- Set up a local K8s cluster

- Stand up the ArgoCD instance

- Deploy the application (via git repo)

My local machine (specs that matter)

OS: macOS Sonoma

Processor Arch: Apple Silicon (M1, i.e. 64-bit ARM)

Local git repo

On my machine I’m using this as the root directory for all the following configuration: /Users/Parth/Dev/k8s/local-argocd/

Create our foo repo:

    cd /Users/Parth/Dev/k8s/local-argocd
    mkdir foo-repo
    cd foo-repo
    git init

We’ll come back to this towards the end.

Local K8s cluster

I’m using Kind to create a basic Kubernetes cluster. You can find an earlier post on installing Kind and other prerequisites here. This cluster will run both the ArgoCD instance and our test application, in separate namespaces.

I used a simple, single node cluster to run both the control plane and worker functions. The reason for this was the simplicity of storage. In a multi-node setup, presenting the same host directory on multiple nodes would be a complexity I didn’t feel was worth undertaking for this exercise.

Cluster configuration:

    # /Users/Parth/Dev/k8s/local-argocd/kind-config.yaml
    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    name: local-argocd
    nodes:
    - role: control-plane
      kubeadmConfigPatches:
      - |
        kind: InitConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-labels: "ingress-ready=true"
      extraPortMappings:
      - containerPort: 80
        hostPort: 80
        protocol: TCP
      - containerPort: 443
        hostPort: 443
        protocol: TCP
      extraMounts:
      - hostPath: /Users/Parth/Dev/k8s/local-argocd/foo-repo
        containerPath: /tmp/foo-repo

- The kubeadmConfigPatches section passes an InitConfiguration to our kind cluster which specifies extra args. Together with the port mappings, these allow us to set up the ingress that makes the GUI accessible on port 443.

- The extraMounts section presents the local git repo to the node, mounting it at the path /tmp/foo-repo on the node. Recall that our node is essentially a container.

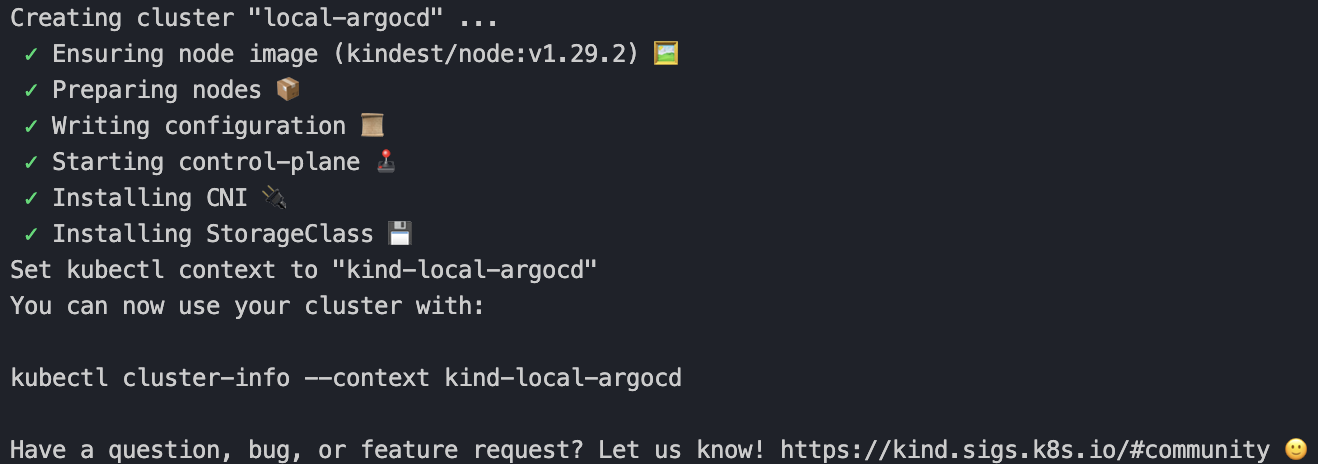

Create the cluster using this config:

    kind create cluster --config /Users/Parth/Dev/k8s/local-argocd/kind-config.yaml
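To confirm that the host directory really is visible inside the node, you can list it from within the node container. The sketch below assumes Docker is the container runtime and relies on kind naming the control-plane container `<cluster-name>-control-plane`. The helper names `kind_node_name` and `check_repo_mount` are made up for this example.

```shell
# Hypothetical helpers, not part of kind itself.

# kind names the control-plane node container "<cluster-name>-control-plane".
kind_node_name() {
  printf '%s-control-plane\n' "$1"
}

# List the mounted repo from inside the node container (assumes Docker).
check_repo_mount() {
  docker exec "$(kind_node_name "$1")" ls -la /tmp/foo-repo
}
```

Running `check_repo_mount local-argocd` should show the `.git` directory created by `git init`.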

Stand up ArgoCD

Deploying ArgoCD on this local cluster takes three simple commands:

    kubectl create namespace argocd
    kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/kind/deploy.yaml

- First, we create the namespace called argocd.

- Next, we install the ArgoCD components into the argocd namespace.

- Finally, we install the NGINX ingress controller. Its manifest creates and uses its own ingress-nginx namespace.
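Both installs return before their pods are actually running, and creating an Ingress while the NGINX admission webhook is still starting tends to fail. A minimal sketch of waiting for both follows. The pod selector is the one used in the kind ingress guide, the timeouts are arbitrary, and `wait_for_controllers` is a hypothetical helper name.

```shell
# Hypothetical helper: block until the ingress controller and ArgoCD are ready.
wait_for_controllers() {
  # Wait for the NGINX ingress controller pod in its own namespace.
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=90s &&
  # Wait for the ArgoCD API server / UI deployment to finish rolling out.
  kubectl -n argocd rollout status deployment/argocd-server --timeout=180s
}
```

Call `wait_for_controllers` after the three commands above and before applying any Ingress.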

To make the GUI accessible on your local HTTPS port, create the following Ingress and apply it with kubectl apply -f /Users/Parth/Dev/k8s/local-argocd/argo-ingress.yaml:

    # /Users/Parth/Dev/k8s/local-argocd/argo-ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: argocd-server-ingress
      namespace: argocd
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        nginx.ingress.kubernetes.io/ssl-passthrough: "true"
        nginx.ingress.kubernetes.io/force-ssl-redirect: "false"
    spec:
      rules:
      - host: localhost
        http:
          paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: argocd-server
                port:
                  number: 443

You should now be able to access the GUI at https://localhost. To get the login password for the GUI (username admin), run:

    kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d && echo

You will need to select ‘Proceed anyways’ on the browser’s certificate warning, since the certificate is not signed by a trusted CA.
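The `base64 -d` step in the password command is needed because Kubernetes stores Secret values base64-encoded, and the jsonpath expression returns the raw encoded string. Here is a tiny illustration of just that decoding step. The sample value is made up and `decode_secret_value` is a hypothetical helper name.

```shell
# Hypothetical helper: decode one base64-encoded Secret value and add a newline.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
  echo
}

# Made-up sample value, not a real ArgoCD password.
decode_secret_value 'aHVudGVyMg=='
```

The trailing `echo` plays the same role as the `&& echo` in the command above: the decoded value has no newline of its own.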

To tear down, run the following commands:

    kubectl delete -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/kind/deploy.yaml
    kubectl -n argocd delete -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
    kubectl delete -f /Users/Parth/Dev/k8s/local-argocd/argo-ingress.yaml
    kubectl delete namespace argocd

You can stop here if just getting a local ArgoCD server up and running is your goal. Read on for getting the git repo set up.

Deploy an application

Kustomize

In simple terms, Kustomize is a tool that lets you customize Kubernetes resource definitions without editing the original manifests. It is built into kubectl: kubectl apply -k renders a kustomization directory and applies the result, while kubectl kustomize only renders it so you can inspect the output.

Here we use Kustomize to manage and modify our resource definitions. Let’s create a few directories under our root directory:

    cd /Users/Parth/Dev/k8s/local-argocd
    mkdir -p k8s/base
    mkdir -p k8s/overlays/dev

The base dir will contain resource definitions that Kustomize will manage. The overlays dir, on the other hand, will contain modifications (patches) to those resources. Under overlays you can further group by environment, leading to different mods for each env.

So far, our directory structure looks like this:

    local-argocd
    |-- foo-repo
    |-- k8s
    |   |-- base
    |   |-- overlays/dev
    |-- kind-config.yaml
    |-- argo-ingress.yaml

We will store all the base configuration, much of which we have already seen (like the ingress definition), in k8s/base. In addition, we’ll define a Kustomize-specific file there that allows it to manage those resources. Move the argo-ingress.yaml file into k8s/base. Next, define the following:

    # /Users/Parth/Dev/k8s/local-argocd/k8s/base/kustomization.yaml
    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    namespace: argocd
    resources:
    - https://raw.githubusercontent.com/argoproj/argo-cd/master/manifests/install.yaml
    - https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/kind/deploy.yaml
    - argo-ingress.yaml

The resources section tells Kustomize that we want it to manage the mentioned resources. This includes the controller installation manifests (the ones we applied with individual commands earlier) and the ingress definition.
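Before applying anything, it can help to see what the base actually expands to. `kubectl kustomize` renders a kustomization to stdout without touching the cluster. The sketch below summarises that output by resource kind. `count_kinds` is a hypothetical helper name, and it assumes top-level `kind:` fields start at column zero, as they do in rendered output.

```shell
# Hypothetical helper: summarise rendered manifests on stdin by top-level kind.
count_kinds() {
  grep '^kind: ' | sort | uniq -c
}
```

For example, `kubectl kustomize /Users/Parth/Dev/k8s/local-argocd/k8s/base | count_kinds` prints one line per resource kind with a count next to it.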

You might wonder why we need something like Kustomize at all. The reason, as you’ll see next, is that otherwise we’d have to modify the upstream manifests listed in our resources section, specifically the ArgoCD one, which would be a complicated task.

Now that we have our base configuration defined, let’s declare the changes we want to make on top: we want the ArgoCD instance to see the local directory we have mounted on the node. We start with the file that tells Kustomize what resource to modify and how. Create this file under overlays/dev (Note: This is separate from the kustomization.yaml under base):

    # /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev/kustomization.yaml
    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ../../base
    - my-app.yaml
    patches:
    - path: local-git-repo.yaml
      target:
        kind: Deployment
        name: argocd-repo-server
        namespace: argocd

The resources section lists the resources in scope. The my-app.yaml file is our app definition; put a pin in that for now. The patches section tells Kustomize to apply a ‘patch’ to modify our ArgoCD deployment. The path to the patch is in the path field.

Next, define the actual patch:

    # /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev/local-git-repo.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: argocd-repo-server
    spec:
      template:
        spec:
          containers:
          - name: argocd-repo-server
            volumeMounts:
            - mountPath: '/tmp/foo-repo' # path on container
              name: local-git-repo
              readOnly: true
          volumes:
          - name: local-git-repo
            hostPath:
              path: '/tmp/foo-repo' # matches containerPath on node
              type: Directory

This patches the argocd-repo-server Deployment, one of several components needed to run ArgoCD. It adds a hostPath volume pointing at the directory on the host (i.e. the node) and mounts it read-only into the argocd-repo-server container.
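One way to check that the patch lands where intended is to render the overlay and look for the volume name in the output. This is only a sketch: `has_repo_mount` is a hypothetical helper, and the overlay will not render until the my-app.yaml file it lists (defined next) exists.

```shell
# Hypothetical helper: succeed if rendered YAML on stdin mentions our volume.
has_repo_mount() {
  grep -q 'name: local-git-repo'
}
```

Usage would look like `kubectl kustomize /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev | has_repo_mount && echo "patch applied"`.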

Finally, we define our foo application:

    # /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev/my-app.yaml
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: my-app
      namespace: argocd
    spec:
      project: default
      destination:
        namespace: my-app
        server: https://kubernetes.default.svc
      source:
        path: apps/test-app # source of manifests
        repoURL: 'file:///tmp/foo-repo'
        targetRevision: HEAD
      syncPolicy:
        # Enables auto-sync
        # automated: {}
        automated:
          prune: true
        syncOptions:
        - CreateNamespace=true

Our directory structure looks like this:

    local-argocd
    |-- foo-repo
    |-- k8s
    |   |-- base
    |   |   |-- argo-ingress.yaml
    |   |   |-- kustomization.yaml
    |   |-- overlays/dev
    |       |-- kustomization.yaml
    |       |-- local-git-repo.yaml
    |       |-- my-app.yaml
    |-- kind-config.yaml

Dummy application

We’re almost there, hang in there a bit longer.

I’m going to use HashiCorp’s http-echo docker image as our application. To see ArgoCD in action, we’ll iterate over a few versions of this image, starting with 0.2.3. Go ahead and add the deployment and service definitions under apps/test-app in the foo repo.

Deployment:

    # /Users/Parth/Dev/k8s/local-argocd/foo-repo/apps/test-app/deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-dummy-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx-dummy
      template:
        metadata:
          labels:
            app: nginx-dummy
        spec:
          containers:
          - image: hashicorp/http-echo:0.2.3
            name: nginx-dummy
            args:
            - "-text=foo"
            ports:
            - containerPort: 5678 # http-echo listens on 5678 by default

Service:

    # /Users/Parth/Dev/k8s/local-argocd/foo-repo/apps/test-app/svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-dummy-svc
    spec:
      ports:
      - port: 80
        targetPort: 5678 # must match the port http-echo listens on
      selector:
        app: nginx-dummy

Make sure you do a git add and git commit in our foo repo.
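The commit matters because ArgoCD reads the repository at the revision named in the Application (HEAD here), not the files in your working tree. A minimal sketch of the staging and commit step follows. `commit_app_manifests` is a hypothetical helper and the commit message is arbitrary.

```shell
# Hypothetical helper: stage and commit the app manifests in the given repo.
commit_app_manifests() {
  repo_dir="$1"
  git -C "$repo_dir" add apps/test-app &&
  git -C "$repo_dir" commit -m "Add test-app deployment and service"
}
```

Here that would be `commit_app_manifests /Users/Parth/Dev/k8s/local-argocd/foo-repo`.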

With this, deploying ArgoCD along with our app essentially becomes a single command:

    kubectl create -k /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev

Note: You might see an error like the one below:

    error: resource mapping not found for name: "my-app" namespace: "argocd" from "k8s/overlays/dev": no matches for kind "Application" in version "argoproj.io/v1alpha1"
    ensure CRDs are installed first

Run kubectl apply -k /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev and that should fix it.
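The error comes from ordering: the Application object is submitted in the same run as the CustomResourceDefinition that defines its kind, so the API server does not know the kind yet. The second run works because the CRD is registered by then. A sketch of making that explicit is below. It uses `kubectl apply` for both passes, `apply_overlay` is a hypothetical helper name, and the 60s timeout is arbitrary.

```shell
# Hypothetical helper: apply the overlay, tolerating the first-pass CRD error.
apply_overlay() {
  overlay_dir="$1"
  # First pass registers the CRDs; the Application object may be rejected here.
  kubectl apply -k "$overlay_dir" || true
  # Block until the API server serves the Application kind.
  kubectl wait --for condition=established --timeout=60s \
    crd/applications.argoproj.io &&
  # Second pass creates whatever was rejected the first time.
  kubectl apply -k "$overlay_dir"
}
```

It would be called as `apply_overlay /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev`.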

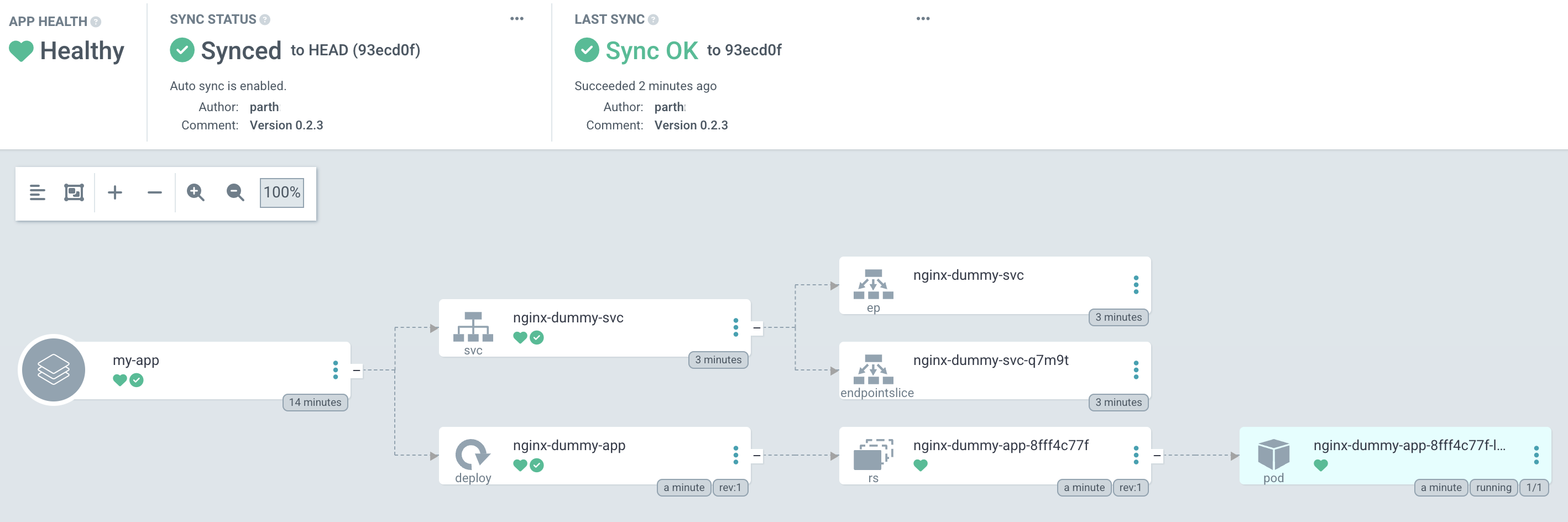

You should now see that ArgoCD picked up our manifests and has started deploying it:

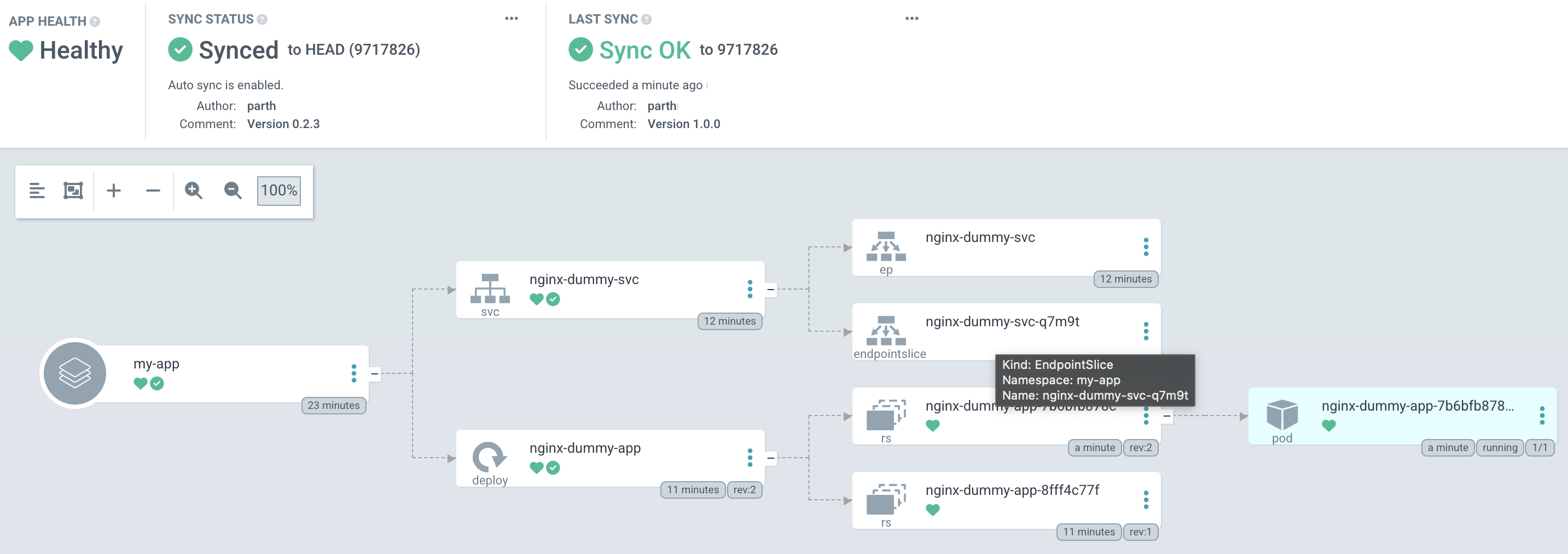

Now let’s bump up the version. Update the image in deployment.yaml from 0.2.3 to 1.0.0 and commit the change to the foo repo. You may have to hit the refresh button to see the effect: our current app pod gets terminated and a new one with the latest version gets created.
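If you plan to iterate over several versions, the edit can be scripted. This is a sketch only: `bump_image_tag` is a hypothetical helper that rewrites the http-echo tag in a manifest, and you still need to commit afterwards.

```shell
# Hypothetical helper: swap the http-echo image tag in a manifest file.
bump_image_tag() {
  manifest="$1"; old_tag="$2"; new_tag="$3"
  # -i.bak works with both the BSD sed on macOS and GNU sed.
  sed -i.bak "s|hashicorp/http-echo:${old_tag}|hashicorp/http-echo:${new_tag}|" "$manifest" &&
  rm -f "${manifest}.bak"
}
```

For example, run `bump_image_tag apps/test-app/deployment.yaml 0.2.3 1.0.0` from inside foo-repo, followed by `git commit -am "Bump http-echo to 1.0.0"`.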

Finally, to tear everything down:

    kubectl delete -k /Users/Parth/Dev/k8s/local-argocd/k8s/overlays/dev